Fly the great big sky, see the great big sea

Kick through continents, bustin’ boundaries

Take it hip to hip, rock it through the wilderness

“Roam” – The B-52s

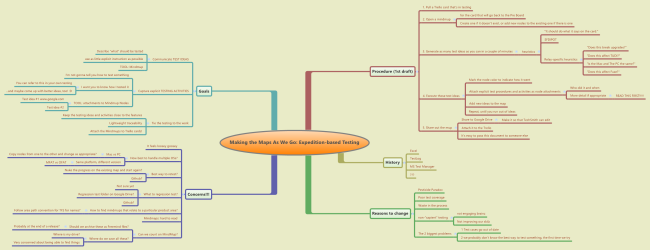

OK, first: three weeks ago, I talked about a desire to throw out the last of our Factory-style test cases in favor of something more lightweight for documentation.

Then, two weeks ago, I presented a rough draft recipe for doing so, using MindMup and Trello.

Now we’re two weeks in. What have we learned?

Making The Maps As We Go

As I mentioned in my previous post, I’m adapting xBTM to my context. xBTM, as I understand it, is basically a combination of Session-Based and Thread-Based testing, where you choose between the two based on the complexity of your task and your work environment. What my team is doing generates largely the same test artifacts as xBTM seems to, but our procedure is turning out somewhat different.

As before, a high-level view of the testing part (as opposed to the test management part) looks like this:

- Generate as many test ideas as you can and put them in a mindmap

- Execute those ideas and annotate the mindmap, attaching reports of your test actions to the ideas

- Use what you’ve learned to update the mindmap with additional test ideas

Repeat the last two steps until you run out of ideas.

Having done this for a couple of weeks (and gotten some others to participate with me), I find that what makes this really interesting is the flexibility and adaptability of the mindmap. You start with a really roughed-in plan, and then you make the map as you go. It’s real-time software cartography, as Aaron Hodder nicely put it on Twitter. The map helps you build a mental model of the test space while you’re working, and afterward it shows others where you went and what you saw.

Expeditionary Testing: An Anecdote

We made a change to our product’s silent (“unattended”) installer during this process. I started my testing by generating a mindmap and blasting out a large number of test ideas using the SFDIPOT heuristic. I began testing, but it turned out that the change was not working at all. I attached the mindmap to the card for the task and moved on to something else.

After the issue was fixed, someone else (a senior dev) picked up the mindmap and went through it, testing as he went. He marked some nodes red and some green, and added a couple of ideas. The red nodes (bugs) were things he could work on, so he went ahead and fixed them, checked them in, and went home for the night.

The next day, the testing task got picked up by a junior developer. She had no idea how to test our silent installer, but she understood the test ideas; after a couple of minutes of training, she was off and running. About an hour later, she came back with showstopping bugs. Here’s the interesting thing about these bugs: they were not anything the previous dev had tested, and the tests that found them were not previously on the mindmap. Despite having no previous experience testing this feature, she looked at the mindmap and saw a gap in coverage. She added the nodes to the map, tried them out, and found bugs.

So that was pretty rad. I see this as a big strength of this technique: those participating in testing projects can see the analysis behind the test ideas and contribute to it in an obvious and frictionless way. If I had handed over a list of 12 explicit test cases instead of a mindmap with 12 test ideas, the gap in coverage would not have been detected. The poor souls running those test cases would have been racing to finish them before the suicidal urges started bubbling up. The mindmaps enable a two-way conversation: I made a map of where I went, and you can add to it when you go places I didn’t go. Real-time software cartography… expeditionary testing.

Selling It To The Team

Having shown it one-on-one to pretty much everybody on the team, I held a meeting to discuss the philosophy behind this new method and to gather everyone’s concerns.

Perhaps unsurprisingly, no one was sticking up for the Factory-style test cases. People don’t like writing or executing scripted test cases, and they had seen the benefits of the expeditionary approach firsthand.

What did surprise me was how much concern there was around managing the test ideas and results. We rarely go back and look at old test results, yet the chief concern people had was “how are we going to pull these out of mothballs and look at them once the Trello cards are archived?” A corollary: are we worried that MindMup, as a service, might be unavailable when we need it? These are valid concerns, and I’m not sure how best to address them. We may end up using a more robust tool (XMind?) that lets us store the results internally instead of in the cloud.

The experiment continues. I was encouraged to see this post from Jason Coutu reporting similar results from using mindmaps in this expeditionary fashion… if you are using mindmaps in this way (or in some other way with regard to testing and test management), please share your experiences!

-Clint (@vscomputer)